I’m visiting a Pentaho customer right now whose current “transaction” volume is 200 million rows per day. Relatively speaking, this puts their planned warehouse in the top quintile of size. They will face significant issues with load times, data storage, processing reliability, etc. Kettle is the tool they selected and it is working really well. Distributed record processing using Kettle and a FOSS database is a classic case study for Martens scale out manifesto.

This organization doesn’t have unlimited budget. Specifically, they don’t have a telecom type budget for their telecom like volume of data. One of the issues that has come up with their implementation has been the tradeoff between space, and keeping the base level fact records. For example, at 200 million / day and X bytes per fact you start to get into terabytes of storage quickly. It was assumed, from the start of the project, only summary level data could be stored for any window of time exceeding 10 days or so.

The overall math is sound.

Size per record (S) x number of records per day (N) = size per day of data growth (D)

In this equation, there’s really not much we can do, if we want to keep the actual base level transaction, about the number of records per day. N becomes a fixed parameter in this equation. We do have some control over the S value, which is mostly about what this blog is about.

Can we reduce the size of S by such an amount that D becomes more realistic? The answer is the ARCHIVE engine in MySQL.

I created the DDL for a typical fact record. Few Dimension IDs and a few of numeric values.

CREATE TABLE test_archive

(

DIM1_ID INT

, DIM2_ID INT

, DIM3_ID INT

, DIM4_ID INT

, DIM5_ID INT

, NUMBER1 FLOAT

, NUMBER2 FLOAT

, NUMBER3 FLOAT

, NUMBER4 FLOAT

) engine=ARCHIVE

;

I did this in MyISAM as well

CREATE TABLE test_myisam

(

DIM1_ID INT

, DIM2_ID INT

, DIM3_ID INT

, DIM4_ID INT

, DIM5_ID INT

, NUMBER1 FLOAT

, NUMBER2 FLOAT

, NUMBER3 FLOAT

, NUMBER4 FLOAT

) engine=MyISAM

;

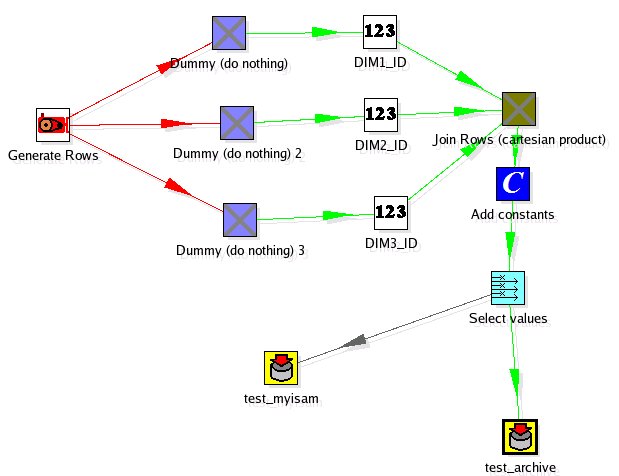

I used a simple Kettle transformation to populate both the these tables with 1 million rows of data. It wasn’t scientific but the INSERT performance of the Archive and MyISAM tables were very similar (within 10% of the throughput of rows).

So now we have a million rows, with a reasonable FACT record format. How much space do these 1 million rows require to store?

+--------------+---------+---------------+------------+

| table_name | engine | total_size_mb | table_rows |

+--------------+---------+---------------+------------+

| test_archive | ARCHIVE | 2.63 | 1000000 |

| test_myisam | MyISAM | 36.24 | 1000000 |

+--------------+---------+---------------+------------+

The Archive table uses an order of magnitude LESS space to store the same set of FACTS. What about query performance? I’ll have to do another blog on a more scientific approach but the anecdotal query performance on typical OLAP queries (select sum() from fact group by dim1_id) seemed related (less than a 15% difference).

Let’s be clear here, one MUST have a good aggregate table strategy so the fact records are RARELY accessed because the performance will not rock your world. However, this is the case with these volumes anyhow. Summaries and Aggregates have to be built for the end user to have a good experience on the frond end.

Archive engine is strange. INSERT/SELECT only. You can’t do ANYTHING with it, except drop and recreate it. For historical fact loads on “settled” DW data segmented on a daily basis this is usually not an issue. No transactions need, no DML needed. I’d also like to see how this compares to “packed” MyISAM tables at some point. I’m guessing I wouldn’t expect to see it beat the compression in terms of storage space, but there’s some nice things you can do with MyISAM tables (for instance, MERGE).

Back of the napkin calculation to handle BIG data volume:

2.63 MB / million * 200 per day = 526 MB / day

526 MB / day * 365 days = 187.5 GB

Anyone else have fun experiences to share with the Archive engine?

Side Note: I did all this testing on a VNC Server enabled EC2 instance I use for testing. Fire it up, VNC in, do my testing, shut it down. I LOVE EC2!

Hi!

This is with 5.0 right? In 5.1 Archive’s reading got a lot faster and its insertion improved as well (especially with tables that have lots of nulls). I spent a little time with an 8way machine making sure archive would work with multiple processors well.

Archive does not have update/delete for reasons of market. I was asked not to implement them. I’ve got it on paper to do it, but its not really high on my list at the moment (the new skip indexes are though, which will make scans faster).

Cheers,

-Brian

Brian, does it work in combination with table partitioning as well?

if loaded one day’s data which is wrong, how can we reload it? because we can’t use delete or update.

Margie –

You can’t (from a DML perspective), but you can from a logical perspective by:

DROP TABLE FACT_DAY_01_02_2007;

CREATE TABLE FACT_DAY_01_02_2007

(

DIM1_ID INT

, DIM2_ID INT

, DIM3_ID INT

, DIM4_ID INT

, DIM5_ID INT

, NUMBER1 FLOAT

, NUMBER2 FLOAT

, NUMBER3 FLOAT

, NUMBER4 FLOAT

) engine=ARCHIVE

;

and then rerun your ETL. Archive tables bear a certain burden, but they can be worthwhile. Admittedly, I’ve not heard how this customer did with it operationally so I don’t know how it worked for them on a day to day basis. 🙂

Let’s take this data warehouse example one step further.

Suppose your example company wanted to keep the data in the archive table for NO MORE than 90 days.

How do you roll off the 91-day old data without access to DELETE?

If the answer to this question is obvious, please accept my apology and even a terse link would save me any more time. Thanks! MySQL rocks, and looking to make great use of Archive.

(I’m thinking it MUST be obvious… I see so many great example blog posts about Archive Engine and logging… but they all dance around the logrotate functionality).

Haven’t done it yet, but I assume the answer is to create a new table periodically, selecting the rows that are 90 days old or less from the current table.