Kettles secret in-memory database is

- Not actually secret

- Not actually Kettles

There. I said it, and I feel much better. 🙂

In most circumstances, Kettle is used in conjunction with a database. You are typically doing something with a database: INSERTs, UPDATEs, DELETEs, UPSERTs, DIMENSION UPDATEs, etc. While I do know of some people that are using Kettle without a database (think log munching and summarization) a database is something that a Kettle developer almost always has at their disposal.

Sometimes there isn’t a database. Sometimes you don’t want the slowdown of persistence in a database. Sometimes you just want Kettle to just have an in memory blackboard across transformations. Sometimes you want to ship an example to a customer using database operations but don’t want to fuss with database install, dump files, etc.

Kettle ships with a Hypersonic driver, and therefore, has the ability to create an in memory database that does (most) everything you need database wise.

For instance, I’ve created two sample transformations that use this in-memory database.

The first one, kettle_inprocess_database.ktr, loads data into a simple table:

The second one, kettle_inprocess_database_read.ktr, reads the data back from that simple table:

To setup the database used in both of these transformations, which has no files, and is only valid for the length of the JVM I’ve used the following Kettle database connection setup.

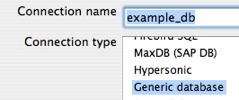

I setup a connection named example_db using the Generic option. This is so that I have full control over the JDBC URL.

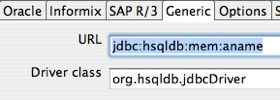

I then head to the Generic tab and input by URL and Driver. Nothing special with the driver class, org.hsqldb.jdbcDriver that is just the regular HSQLDB driver name. The URL is a little different then usual. The URL provided tells hypersonic to use a database in-memory with no persistence, and no data fil.e”

Ok, that means the database “example_db” should be setup for the transformations.

Remember, there is NOTHING persistent about this database. That means, every time I start Kettle the database will have no tables, no rows, no nothing. Some steps to run through this example.

- Open kettle_inprocess_database. “Test” the example_db connection to ensure that I / you have setup the in-memory database correctly.

- Remember, nothing in the database so we have to create our table. In the testing table operator, hit the SQL Button at the bottom of the editor to generate the DDL for this smple table.

- Run kettle_inprocess_database and verify that it loaded 10 rows into testingtable.

- Run kettle_inprocess_database_read and verify that it is reading 10 rows from the in-memory table testingtable.

I should note that using this approach isn’t always a good idea. In particular there’s issues with memory management, thread safety, it definitely won’t work with Kettles clustering features. However, it’s a simple easy solution for some circumstances. Your mileage may vary but ENJOY!

out of the topic question:

do you think kettle can handle asn.1 format files ?

It should also be possible to create an in-memory database using the SQLite connector. Instead of an actual file name use

:memory:. I use SQLite in-memory databases embedded in Excel for a lot of micro-ETL tasks.Tom,

You are correct. These exact transformations above run on SQLite equally well.

All I did was change the Generic tab to:

URL : jdbc:sqlite:memoryCLASS : org.sqlite.JDBC

And it works the same way! Thanks for pointing that there’s actually TWO in memory databases that ship with Kettle! 🙂

Nicholas,

I’ve read your BI articles for a while now but tended to skip the Pentaho entries. I got the opportunity to sit through a presentation on Pentaho at ODTUG this week so now I have to go back over your older articles.

This is a really slick package. I’m thinking of starting with the ETL package. I’m drawing a blank on the name at the moment. Data Integrator is what’s in my head but that’s an Oracle tool.

Anyway, I have a lot of experience with OWB and the Pentaho tool looks quite a bit like it (although I relaize it works quite differently).

LewisC

The database we use for the Kettle unit tests is actually Apache Derby, so you have Hypersonic, SQLite, Derby and then there’s of-course Hypersonic2 (H2).

All these can be used for in-memory purposes altough they vary in quality 🙂

Lewis: from the bottom of my heart: I really hope Kettle doesn’t even remotely look like OWB.

Amihay, ASN.1 is a programming language, only by streching the definition of “file format” it can be called a file format. If you feel like implementing support for it, you can write your own plugin, but we don’t support it out of the box.

Matt

Nick,

This will come in really handy for me. Good to know. Nice example. Thanks

Just in case anyone else finds this article and wants to know how to do H2 memory, set the host to localhost and the database to “mem:db” without the quotes.