At yesterdays Eigenbase Developer Meetup at SQLstream‘s offices in San Francisco we arrived at a new logo for LucidDB. DynamoBI is thrilled to have supported and funded the design contest to arrive at our new mascot. Over the coming months you’ll see the logo make it’s way out to the existing luciddb.org sites, wiki sites, etc. I’m really happy to have a logo that matches the nature of our database – BAD ASS!

At yesterdays Eigenbase Developer Meetup at SQLstream‘s offices in San Francisco we arrived at a new logo for LucidDB. DynamoBI is thrilled to have supported and funded the design contest to arrive at our new mascot. Over the coming months you’ll see the logo make it’s way out to the existing luciddb.org sites, wiki sites, etc. I’m really happy to have a logo that matches the nature of our database – BAD ASS!

Category Archives: DynamoBI

DynamoDB: Built in Time Dimension support!

DynamoDB (aka LucidDB) is not just another column store database. Our goal is being the best database for actually doing Business Intelligence; while that means being fast and handling large amounts of data there’s a lot of other things BI consultant/developers need. I’ll continue to post about some of the great BI features that DynamoDB has for the modern datasmiths.

First feature to cover that’s dead easy, is the built in ability to generate a time dimension, including a Fiscal Calendar attributes. If you’re using Mondrian (or come to that, your own custom SQL on a star schema) you need to have a time dimension. Time is the most important dimension! Every OLAP model I’ve ever built uses one! It something that you, as a datasmith will need to do with every project; that’s why we’ve built it right into our database.

Here’s a dead simple way to create a fully baked, ready to use Time Dimension to use with Mondrian.

-- Create a view that is our time dimension for 10 years, with our -- Fiscal calendar starting in March (3) create view dim_time as select * from table(applib.fiscal_time_dimension (2000, 1, 1, 2009, 12, 31, 3));

OK, that’s it. You’ve created a Time Dimension! * see NOTE at end of post.

So, we’ve created our time dimension, complete with a Fiscal calendar for 10 years in a single statement! Awesome – but what does it contain?

-- Structure of new time dimension select "TABLE_NAME", "COLUMN_NAME", "DATATYPE" from sys_root.dba_columns where table_name = 'DIM_TIME'; +-------------+---------------------------------+-----------+ | TABLE_NAME | COLUMN_NAME | DATATYPE | +-------------+---------------------------------+-----------+ | DIM_TIME | FISCAL_YEAR_END_DATE | DATE | | DIM_TIME | FISCAL_YEAR_START_DATE | DATE | | DIM_TIME | FISCAL_QUARTER_NUMBER_IN_YEAR | INTEGER | | DIM_TIME | FISCAL_QUARTER_END_DATE | DATE | | DIM_TIME | FISCAL_QUARTER_START_DATE | DATE | | DIM_TIME | FISCAL_MONTH_NUMBER_IN_YEAR | INTEGER | | DIM_TIME | FISCAL_MONTH_NUMBER_IN_QUARTER | INTEGER | | DIM_TIME | FISCAL_MONTH_END_DATE | DATE | | DIM_TIME | FISCAL_MONTH_START_DATE | DATE | | DIM_TIME | FISCAL_WEEK_NUMBER_IN_YEAR | INTEGER | | DIM_TIME | FISCAL_WEEK_NUMBER_IN_QUARTER | INTEGER | | DIM_TIME | FISCAL_WEEK_NUMBER_IN_MONTH | INTEGER | | DIM_TIME | FISCAL_WEEK_END_DATE | DATE | | DIM_TIME | FISCAL_WEEK_START_DATE | DATE | | DIM_TIME | FISCAL_DAY_NUMBER_IN_YEAR | INTEGER | | DIM_TIME | FISCAL_DAY_NUMBER_IN_QUARTER | INTEGER | | DIM_TIME | FISCAL_YEAR | INTEGER | | DIM_TIME | YEAR_END_DATE | DATE | | DIM_TIME | YEAR_START_DATE | DATE | | DIM_TIME | QUARTER_END_DATE | DATE | | DIM_TIME | QUARTER_START_DATE | DATE | | DIM_TIME | MONTH_END_DATE | DATE | | DIM_TIME | MONTH_START_DATE | DATE | | DIM_TIME | WEEK_END_DATE | DATE | | DIM_TIME | WEEK_START_DATE | DATE | | DIM_TIME | CALENDAR_QUARTER | VARCHAR | | DIM_TIME | YR | INTEGER | | DIM_TIME | QUARTER | INTEGER | | DIM_TIME | MONTH_NUMBER_OVERALL | INTEGER | | DIM_TIME | MONTH_NUMBER_IN_YEAR | INTEGER | | DIM_TIME | MONTH_NUMBER_IN_QUARTER | INTEGER | | DIM_TIME | MONTH_NAME | VARCHAR | | DIM_TIME | WEEK_NUMBER_OVERALL | INTEGER | | DIM_TIME | WEEK_NUMBER_IN_YEAR | INTEGER | | DIM_TIME | WEEK_NUMBER_IN_QUARTER | INTEGER | | DIM_TIME | WEEK_NUMBER_IN_MONTH | INTEGER | | DIM_TIME | DAY_FROM_JULIAN | INTEGER | | DIM_TIME | DAY_NUMBER_OVERALL | INTEGER | | DIM_TIME | DAY_NUMBER_IN_YEAR | INTEGER | | DIM_TIME | DAY_NUMBER_IN_QUARTER | INTEGER | | DIM_TIME | DAY_NUMBER_IN_MONTH | INTEGER | | DIM_TIME | DAY_NUMBER_IN_WEEK | INTEGER | | DIM_TIME | WEEKEND | VARCHAR | | DIM_TIME | DAY_OF_WEEK | VARCHAR | | DIM_TIME | TIME_KEY | DATE | | DIM_TIME | TIME_KEY_SEQ | INTEGER | +-------------+---------------------------------+-----------+ -- Let's look at a few rows select time_key_seq, time_key, yr, month_number_in_year, fiscal_year , fiscal_month_number_in_year from dim_time; +---------------+-------------+-------+-----------------------+--------------+------------------------------+ | TIME_KEY_SEQ | TIME_KEY | YR | MONTH_NUMBER_IN_YEAR | FISCAL_YEAR | FISCAL_MONTH_NUMBER_IN_YEAR | +---------------+-------------+-------+-----------------------+--------------+------------------------------+ | 1 | 2000-01-01 | 2000 | 1 | 2000 | 11 | | 2 | 2000-01-02 | 2000 | 1 | 2000 | 11 | | 3 | 2000-01-03 | 2000 | 1 | 2000 | 11 | | 4 | 2000-01-04 | 2000 | 1 | 2000 | 11 | | 5 | 2000-01-05 | 2000 | 1 | 2000 | 11 | | 6 | 2000-01-06 | 2000 | 1 | 2000 | 11 | | 7 | 2000-01-07 | 2000 | 1 | 2000 | 11 | | 8 | 2000-01-08 | 2000 | 1 | 2000 | 11 | | 9 | 2000-01-09 | 2000 | 1 | 2000 | 11 | | 10 | 2000-01-10 | 2000 | 1 | 2000 | 11 | +---------------+-------------+-------+-----------------------+--------------+------------------------------+

Generating the Time Dimension is accomplished using DynamoDBs ability to include Java based UDF Table Functions. Table functions are really powerful – they allow a BI developer to write custom functions that output a “table” that can be queried like ANY OTHER TABLE (mostly). Check out the wiki page FarragoUdx if your interested.

And of course: download LucidDB and give it a whirl!

NOTE: To be candid, doing it as a view isn’t the best approach. For anything beyond tiny (5 million +) we should actually create the table, and do an INSERT INTO SELECT * FROM TABLE(fiscal_time_dimension).

Instant Relief from MySQL Reporting Queries: Incremental Updates

Yesterday, I covered how you can do an initial “replication” of data from MySQL to DynamoDB and how this can improve performance, and save storage space. The follow on question becomes:

That’s Great Nick. But how do I do keep my data up to date?

We’ve got data in our Airline Performance dataset through 31-DEC-2007. I loaded 1 year, all of 2007, for the previous example. What happens when the FAA publishes their 2008 January results, and we’ve loaded the new months worth of data into MySQL?

MySQL:

select count(*) from otp.ontime; 8061223

select count(*) from ontime where FlightDate > ‘2007-12-31’; 605765

select count(*) from ontime where FlightDate <= ‘2007-12-31’; 7455458

DynamoDB:

select count(*) from FASTER.”ontime”; 7455458

So, we’ve added approximately 600k new records to our source system that we don’t have in our reporting system. How do we incrementally insert these records and get just the 600k new rows into our DynamoDB reporting instance?

Easy Easy Easy.

We’ve already done all the work, all we have to do is simply get records we haven’t processed yet! Should take just a few minutes to get our current table “up to date” with the one over in MySQL.

DynamoDB:

select max(“FlightDate”) from FASTER.”ontime”; 2007-12-31

insert into FASTER.”ontime” select * from MYSQL_SOURCE.”ontime” where “FlightDate” > DATE ‘2007-12-31’; 605765

In other words, let’s select from MySQL any records whose date is beyond what we have currently (2007-12-31).

select count(*) from FASTER.”ontime”; 8061223

select count(*) from FASTER.”ontime” where “FlightDate” > DATE ‘2007-12-31’; 605765

MySQL:

While the DynamoDB INSERT statement was running, the following SQL was being run on MySQL.

show processlist shows a SQL session with the following SQL:

SELECT * FROM `ontime` WHERE `FlightDate` > DATE ‘2007-12-31’;

A single SQL statement (insert into select * from table where date > last time) has you up to date for reporting! Long term we may look to work with Tungsten to be able to keep our data up to date using replication bin log records but for now, this simple pull based approach.

Instant Relief from Slow MySQL Reporting Queries using DynamoDB

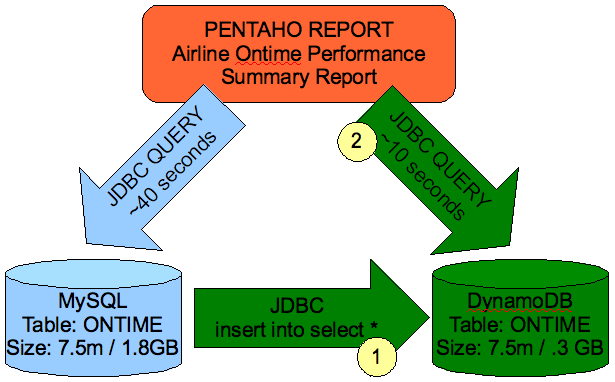

Here’s the scenario. You’ve got a table in MySQL for reporting that has a few million rows, and is denormalized for reporting. You’ve got a Pentaho Report that is querying this MySQL table. You have two problems with the current report.

- Your users are complaining that the query is slow, and they have to wait around for longer than they’d like to see their report. (approx 40s)

- Your DBAs are cranky because they see the size of this table is getting bigger. (approx 1.8GB)

MySQL is fundamentally designed to be an OLTP database and while it does a fantastic job at that, its data warehouse features were built as “bolt on” additions. Can it be used for BI? Absolutely, I’ve used it a many customer sites. Does DynamoDB provide a better set of features/capabilities for doing BI? We think so! Are they both 100% open source? You bet;why not choose the right tool for the right job then?

DynamoDB (aka LucidDB) is a “purpose built for BI” database. What does that mean? Well, I’ll be blogging about a lot of features that speak to our philosophy of a complete “BI Database” not just a fast one. One of the features that makes LucidDB complete, and not just a drag racer, is its ability to connect to remote data sources via JDBC and retrieve data. If you’re doing simple table replications, you don’t have to use an ETL tool, or do export or imports, or LOAD DATA INFILEs, etc. Our ability to connect to remote databases and access them as “remote tables” makes retrieving data into DynamoDB as easy as “insert into mytable select * from remote_table.”

Back to our original issue with our current MySQL

Our report is slow, and our database is big. How slow? Well, not really that bad, but at about 40s per query run that’s enough to tempt your business analyst to go fetch a coffee instead of continuing his work. How big? Well, not really that big, but at about 1.8GB it’s starting to get non trivial in terms of tuning the I/O etc.

Our goal is to improve both using DynamoDB; we’ll leave MySQL as our main OLTP application. We’re not trying to replace it – in fact, we’ll embrace MySQL as the system of record and simply “slurp/report” off this table in a separate reporting environment.

It’s a two step process.

- Connect from DynamoDB to MySQL using a JDBC connector, access the remote table, and draw over the data using a simple INSERT statement.

- Change our Pentaho Report to use the DynamoDB JDBC connector instead of MySQL.

Our Pentaho Report is based on the following SQL

SELECT t.Carrier as “CARRIER”,

c as “C”, c2 as “C2”, c*1000/c2 as “C3” FROM

(SELECT Carrier, count(Carrier) AS c FROM ontime

WHERE DepDelay>10 GROUP BY Carrier) t JOIN

(SELECT Carrier, count(Carrier) AS c2 FROM ontime

GROUP BY Carrier) t2 ON (t.Carrier=t2.Carrier) ORDER BY c3 DESC;

This takes approximately 40s to run on MySQL database running the same machine.

Step 1: Connect, and load the data into our DynamoDB table.

— Create DynamoDB reporting table first

create schema faster;create table faster.”ontime” (

“Year” int,

“Quarter” tinyint ,

“Month” tinyint ,

….. Abbreviated for Brevity ….

“Div5TailNum” varchar(10)

);— Get access the MySQL table OnTime in the OTP schema on host localhost

create schema MYSQL_SOURCE;

set schema ‘MYSQL_SOURCE’;CREATE SERVER MYSQL_REMOTE_SOURCE FOREIGN DATA WRAPPER

sys_jdbc OPTIONS (

driver_class ‘com.mysql.jdbc.Driver’,

url ‘jdbc:mysql://localhost/otp?useCursorFetch=true’,

user_name ‘root’,

password ‘easy’,

fetch_size ‘1000’,

table_types ‘TABLE’,

schema_name ‘otp’);import foreign schema OTP from server MYSQL_REMOTE_SOURCE into MYSQL_SOURCE;

— Load DynamoDB table from MySQL database directly

insert into FASTER.”ontime” select * from MYSQL_SOURCE.”ontime”;Notice that last statement. You don’t have to export to intermediate files, or use an ETL tool (not that that’s bad, I’m a big fan of ETL tools!). You can use good old fashioned SQL to get data from a remote database into DynamoDB.

Step 2: Change the Pentaho Report to use the new connection.

We open up our report and change our connection from MySQL

NOTE: Until we finish our QA’ed builds we’re using LucidDB driver instead of DynamoDB but they are, one and the same.

We make some minor adjustment to the SQL (quoting some tables/etc) and rerun our query and Voila, our report runs in 10s down from 40s, an improvement of 400%.

How about storage? Our storage report shows that DynamoDB is using only .3 GB to store the same 7 Million records as compared to MySQL at 1.8GB, or 1/6 of the storage.

Not a bad investment of a few minutes of time, I’d say. DynamoDB (LucidDB) takes just a few minutes to install, and because of its focus on BI you should find things like retrieving data from remote data sources easy, and effective. Let’s be truthful here as well; once you speed up a report by 400% and reduce its storage by 6x your boss will be calling you a dynamo.

Notes: Full set of scripts posted here: mysql_relief.zip. Original queries and dataset from Vadim at MySQLPerformanceBlog.

DynamoBI: website? bits?

Well, what a soft launch it has been. 🙂

Some people have asked:

When are you going to get a website? Errr…. Soon! We soft launched a bit early, due to some “leaking information” but figured heck, it’s open source let’s let it all out. Soon enough, I swear!

Where can I download DynamoDB? Errr… you can’t yet cause we haven’t finished our build/QA/certification process.

However, since DynamoDB is the alter ego business suit wearing brother of LucidDB, just download the 0.9.2 release if you want to get a sense of what DynamoDB is.

There are 3 built binaries (Linux 32, Linux 64, and Windows 32): http://sourceforge.net/projects/luciddb/files/luciddb/luciddb-0.9.2/ and you can find installation instructions here.

DynamoDB will have the same core database, etc. So, from a raw feature/function perspective what you download and see with LucidDB will be what you get in DynamoDB. DynamoDB will have an administration UI to make things like setting up foreign servers, managing users, etc easier. And lots of other cool new features on the longer term roadmap, which if when we get a website would be a great place for that to go!

Until then, use the open source project, LucidDB. I think you’ll like it!

OpenSQLCamp 2009 with LucidDB

LucidDB is now a sponsor OpenSQLCamp 2009, courtesy of DynamoBI which doesn’t have its own logo yet! 🙂

I’ll be attending representing the LucidDB camp, since Mr. John Sichi will be running a marathon (1/2?) that weekend. Let me know if you’ll be there!

LucidDB: DynamoBI is running with it

I can think of no better analogy than that of a multi leg race. You know, the races where one sprinter runs as fast as they can, before passing the baton to the next sprinter.

First it was Broadbase.

Second it was LucidEra.

Third it was Eigenbase / LucidEra / SQLstream (joint development w/ Eigenbase).

Having purchased commercial rights from LucidEra it’s ours to run with now, alongside Eigenbase and SQLstream.

LucidDB has been described as the “best database no one ever told you about.” That stops today (the telling part, not the best part). Dynamo Business Intelligence Corp will take this great technology to a wider audience and we’ll be telling EVERYONE about it!

Over time, the exceptional features of this open source project will come to light (column store, bit map idxs, drop in java based user plugins, transparent remote JDBC data access, etc). I think it is important to acknowledge how LucidDB arrived to where it is today.

LucidDB is built by smart smart people (people wayyyy smarter than me!). People who’ve written parallel execution engines in Oracle. People who’ve developed Bitmap IDX implementations and helped file those patents. The heritage of LucidDB starts at Broadbase; LucidEra purchased it and brought it to Eigenbase. Eigenbase, and it’s sponsoring companies, have most claim to its current state. Their stewardship and ongoing evolution of the project is a testament to their talents and commitment to open source development. When you pick up LucidDB/DynamoDB and get your first “Ahhhh Cool! 10x Faster than my current database” you have LucidEra/SQLstream/Eigenbase devs to thank. John V. Sichi (lead and main project sponsor), Tai Tran, Julian Hyde, Rushan Chen, Zelaine Fong, Sunny Choi, Steve, Marc, Richard, Hunter, Edan, Damian, Boris, Benny, Stephan, Oscar, …. and the list goes on and on and on. Some of these people will be helping (in small and big ways) with the new company which is great for customers knowing that the people that wrote this stuff will be helping them be successful!

What’s the plan?

- Open Source.

Lots of it. Any readers of this blog, or who know me in general, will know I’m a “burn the boats,” open source kind of guy. We’ll be creating some new projects to make using the features/functions already in LucidDB easier. We’ll also be adding new features, which will make their way back into the LucidDB mainline. - Commercial in Name Only.

Mainline DBMS enhancements and development continue, and will continue to be, in LucidDB (Eigenbase). New projects will be available under an OSI approved license. DynamoDB is the prepackaged, assembled, UI included distribution built for customers/evaluators that we’ll offer support on. Should be as easy as we can possibly make it to evaluate, purchase, and use. - In Progress.

We’ve let the announcement ahead of having our website built, or having completed our own DynamoDB QA’ed build. Our open source roots guide us to an “early and often” approach and we’re taking that approach here. Be patient with us as we roll out the business bit by bit over the next few months. Our #1 priority: establish our support/build/qa infrastructure and get an already great piece of software into hands of people who can benefit from it. Hint: If you’ve ever done a star schema on MySQL you need to talk to us!

One thing I am personally looking forward to is getting to work even more extensively with everyone involved at Eigenbase, including the very talented devs at SQLstream (who produce the best real time analytics/integration engine available).

Feel free to join up in taking LucidDB to a whole new level: Download LucidDB and give it a go yourself, since we just released a new version (0.9.2) yesterday! I believe, like others have already mentioned, adding a bit of commercial support behind an already great piece of software is a winning combination!

Drop a line on through to me if you’re interested in getting involved early on (as a charter customer, developer, user, etc). ngoodman at bayontechnologies (with the .COM).